Browse Issues

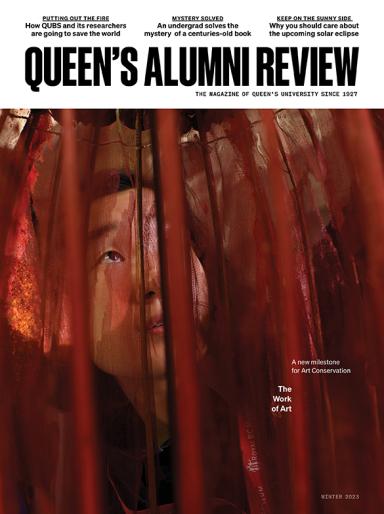

2023

2022

2021

2020

2019

Fall 2019

Summer 2019

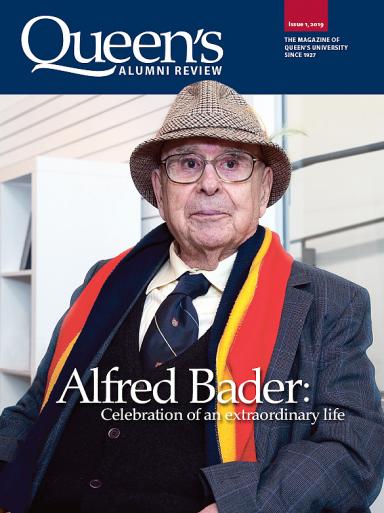

Spring 2019

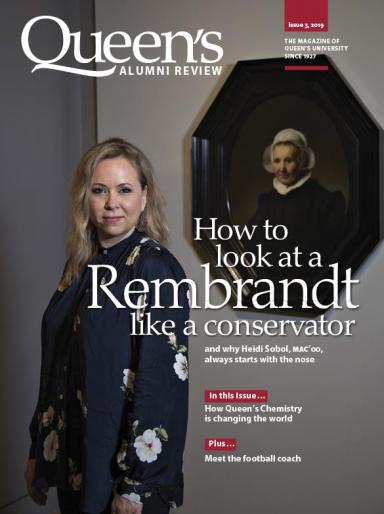

Winter 2019

2018

Fall 2018

Summer 2018

Spring 2018

Winter 2018

2017

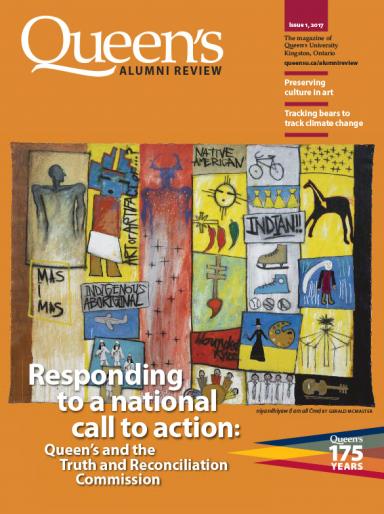

Fall 2017

Summer 2017

Spring 2017

Winter 2017

2016

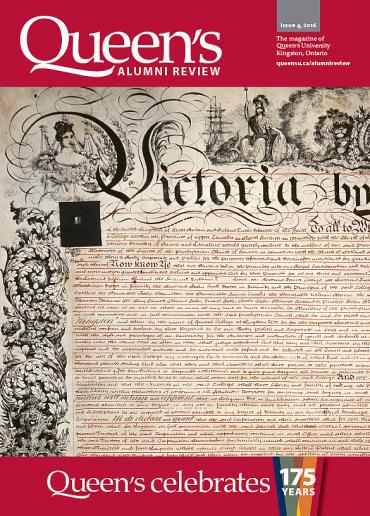

Fall 2016

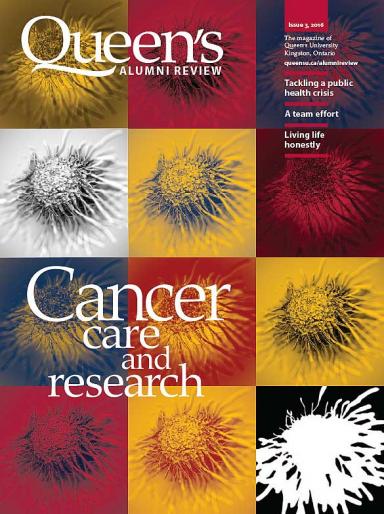

Summer 2016

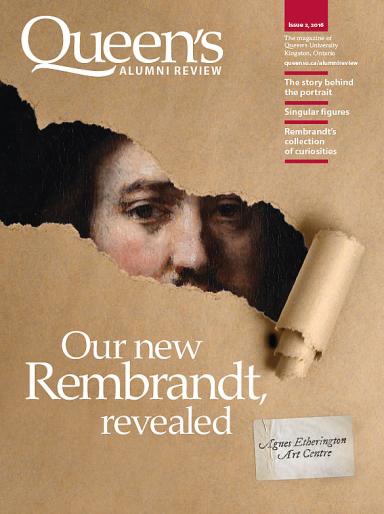

Spring 2016

Winter 2016

2015

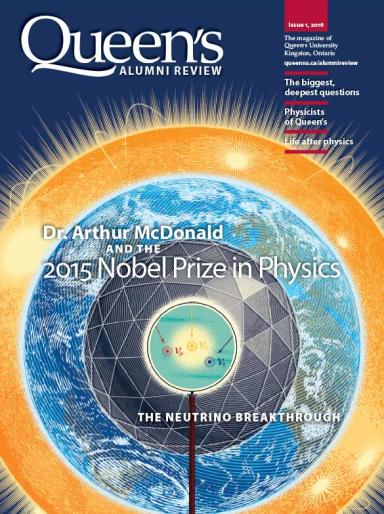

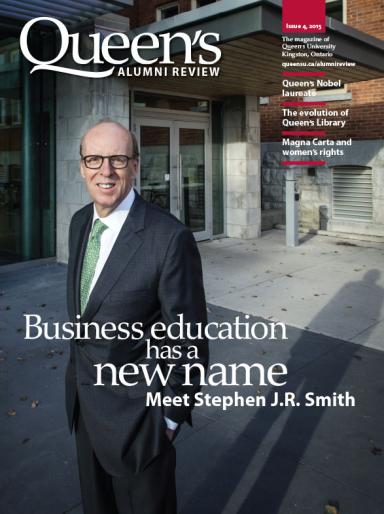

Fall 2015

Summer 2015

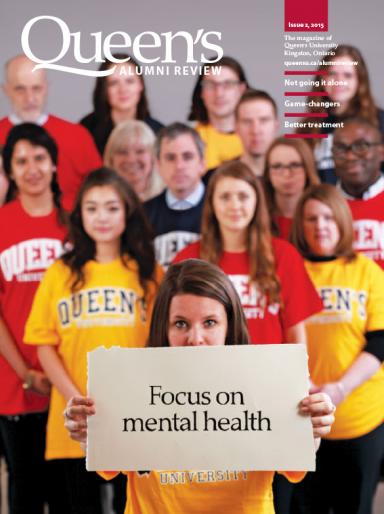

Spring 2015

Winter 2015

2014

Fall 2014

Summer 2014

Spring 2014

Winter 2014

2013

Fall 2013

Summer 2013

Spring 2013

Winter 2013

2012

Fall 2012

Summer 2012

Spring 2012

Winter 2012

2011

Fall 2011

Summer 2011

Spring 2011

Winter 2011

2010

Fall 2010

Summer 2010

Spring 2010

Winter 2010

2009

Fall 2009

Summer 2009

Spring 2009