Improving reproducibility in psychology

August 27, 2015

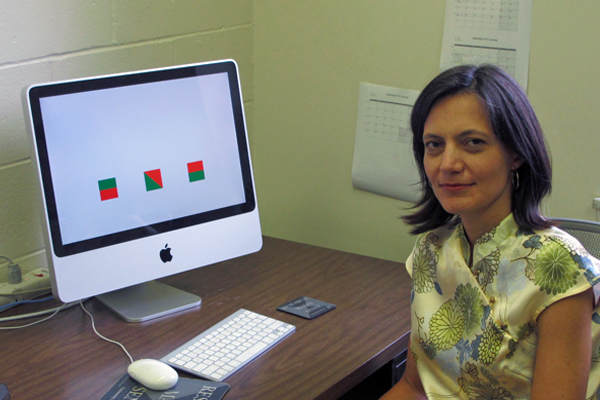

Queen’s University developmental psychology professor Stanka Fitneva has co-authored a study in the journal Science that, for the first time, explores the replicability of psychology research.

The Reproducibility Project: Psychology, launched nearly four years ago, is one of the first crowdsourced research studies in the field. The researchers’ most important finding was that, regardless of the analytic method or criteria used, fewer than half of the replications produced the same findings as the original studies.

"This is a unique project in psychology, and maybe in all of science," says Dr. Fitneva. "It's the first crowdsourcing project where a number of labs from universities all around the world are involved in an effort to see to what extent findings that are published in major journals can be replicated by independent labs."

The 270 researchers in the study, at facilities around the globe, re-examined studies from the 2008 issues of Psychological Science, Journal of Personality and Social Psychology and Journal of Experimental Psychology: Learning, Memory, and Cognition. To reduce the likelihood of error, efforts were made to ensure that the team re-evaluating a study had relevant research expertise. Each team then attempted to reproduce the results of its assigned study.

Reproducibility means that the results recur when the same data are analyzed again, or when new data are collected using the same methods. While the project hypothesized a reproducibility rate approaching 80 per cent, the authors were surprised to discover that fewer than half of the target findings were reproduced.

Dr. Fitneva's team attempted to reproduce the results of an earlier study on the effects of language on children’s object representation. The previous study found that children were more likely to remember an object when the description of its features included direction (e.g. the red part is to the left of the green part) than when it did not (e.g. the red part is touching the green part). Dr. Fitneva's study used the materials provided by the original authors and a larger sample size. The replication identified several factors that appear not to have been considered in the original study and that may limit the effect.

Dr. Fitneva and her co-authors propose three possible reasons for the surprising lack of reproducibility they encountered: small differences in how the studies were carried out; a chance failure to detect the original result; or the possibility that the original result was itself a false positive. In addition, they highlight another possibility: the premium placed on new and innovative discoveries has incentivized researchers to aim for "new" rather than "reproducible" findings.

"Publication bias in science is a major issue and, in the last couple of years, more and more has surfaced about the detrimental consequences of this bias," says Dr. Fitneva. "Just like in any aspect of human activity, there are incentives that influence the conduct of research. Our journals have been prioritizing the publication of, and thus rewarding researchers for, novel and surprising findings."

"When we find something surprising it catches the imagination of the public and the media just as much as it catches the imagination of researchers and journal editors. We need to balance the verification processes in science against the drive for innovation. Assessing the reproducibility of findings is essential for scientific progress but currently researchers receive few rewards for engaging in this practice," says Dr. Fitneva.

The full results of the Reproducibility Project: Psychology have been published in the journal Science.